Cane You See It? Why This Matters

For blind and visually impaired people (BVIP), navigating the world is a daily act of courage. Streets, pavements, and public spaces are filled with moving hazards: pedestrians weaving through crowds, cyclists appearing from blind spots, vehicles pulling out unexpectedly. Each journey requires constant vigilance and split-second decisions.

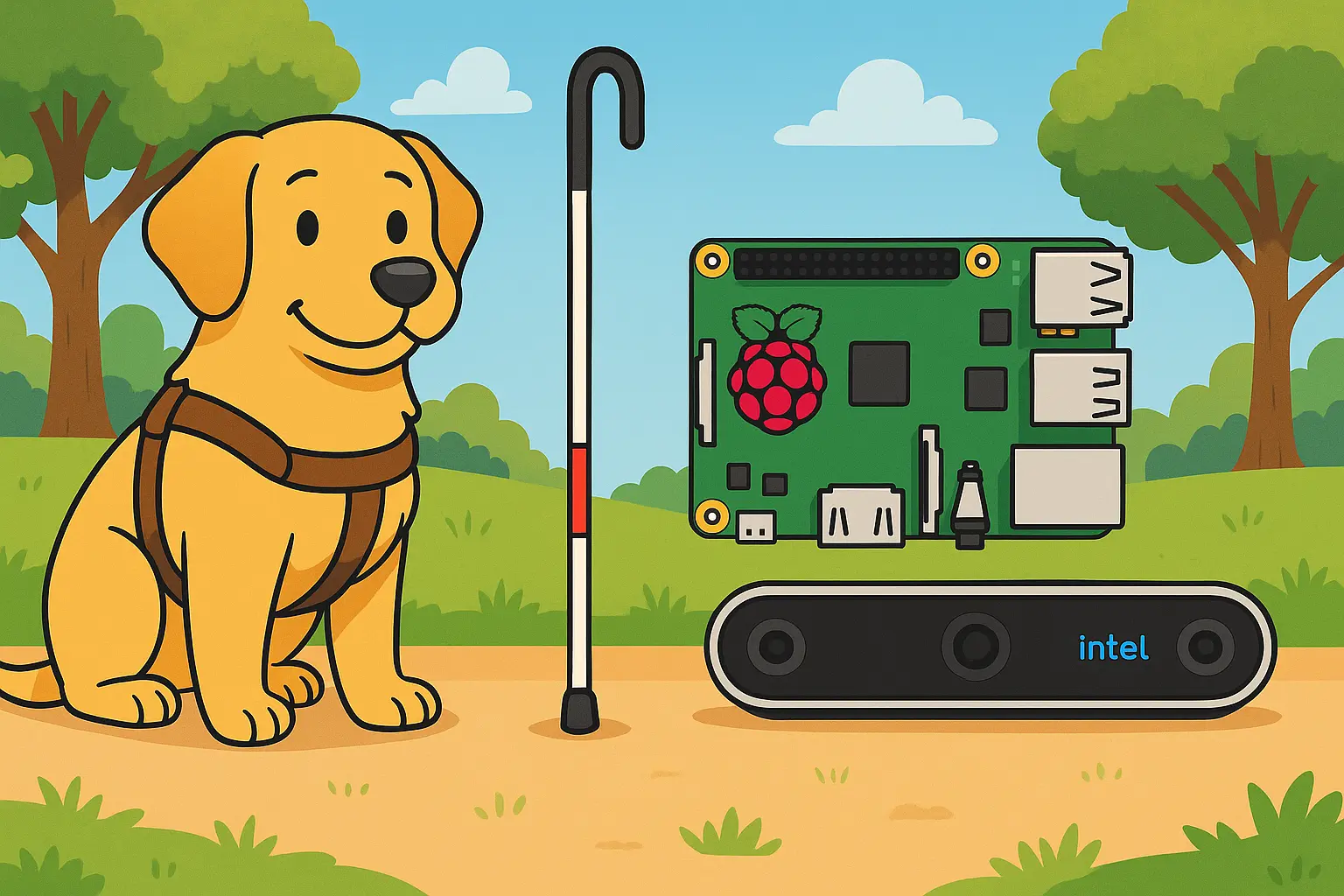

Traditional aids like the white cane and guide dogs are lifelines, but they come with inherent limitations:

- Detection range rarely exceeds arm's length.

- Head-level obstacles remain invisible until contact.

- Guide dogs cost upwards of £38,000 to train.

- Waiting lists stretch beyond 18 months.

This project emerged from a simple question: could we combine real-time object detection with depth sensing to create a wearable warning system? One that works offline, runs on affordable hardware, and responds fast enough to make a real difference in keeping users safe.

Double Vision: Two Eyes Are Better Than One

The solution borrows from nature's playbook: redundancy saves lives. Just as our eyes and ears work together to build spatial awareness, this system employs two complementary detection methods:

YOLOv11 Object Detection serves as the intelligent scout, rapidly identifying and classifying people, vehicles, and obstacles with AI-powered precision.

Depth-Based Safety Zone Monitoring acts as the reliable backup, continuously scanning the path ahead for anything that gets too close, regardless of what it is.

Think of it as having both a knowledgeable guide who can tell you "there's a cyclist approaching from the left" and a vigilant guardian who simply says "something's too close, move now." The object detector provides context and classification, while the depth system ensures nothing slips through undetected.

Feeding the Beast: Giving the System Its Sight

Every great system starts with great components. The hardware choices here balance performance, cost, and practicality.

The system's "eyes" are an Intel RealSense D435 stereo depth camera, a compact powerhouse chosen for its accurate short-range depth sensing, robust performance across lighting conditions, and form factor suitable for wearable applications.

The "brain" is a Raspberry Pi 5, selected after careful consideration against the Jetson Nano. While the Jetson offers GPU acceleration, the Pi 5 wins on cost (it is considerably cheaper), community support, and an ARM Cortex-A76 CPU that performs significantly better than previous Pi generations.

For object recognition, the models leverage the COCO dataset. It is not purpose-built for BVIP navigation, but it is rich with relevant classes including people, cars, bicycles, and street furniture. To optimise for speed, the original 80 classes were systematically reduced to focus only on the most relevant hazards for pedestrian navigation.
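To make the idea of class reduction concrete, here is a minimal sketch of a post-hoc filter over COCO class IDs. It is not the fine-tuning approach used in this project, only a simpler illustration of narrowing 80 classes to a handful. The class IDs follow the standard 80-class COCO ordering, but the chosen subset and the `(class_id, confidence)` tuple layout are assumptions, since the source does not list which classes were kept.

```python
# Hypothetical navigation subset; the classes actually kept are not stated
# in the source. IDs follow the standard 80-class COCO ordering.
NAVIGATION_CLASSES = {
    0: "person",
    1: "bicycle",
    2: "car",
    3: "motorcycle",
    5: "bus",
    7: "truck",
}


def keep_navigation_hazards(detections):
    """Drop detections whose class is not in the navigation subset.

    Each detection is a (class_id, confidence) tuple. This layout is
    illustrative and not any library's native output format.
    """
    return [
        (NAVIGATION_CLASSES[class_id], confidence)
        for class_id, confidence in detections
        if class_id in NAVIGATION_CLASSES
    ]


# person, bench, bicycle, chair
raw = [(0, 0.91), (13, 0.62), (1, 0.78), (56, 0.55)]
hazards = keep_navigation_hazards(raw)
print(hazards)
```

Filtering after inference does not speed the model up by itself; retraining with fewer output classes, as this project did, is what shrinks the detection head.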

YOLO and Behold: The AI at Work

At the heart of the detection system lies YOLOv11, the latest evolution in the "You Only Look Once" family of real-time object detectors. Unlike traditional two-stage detectors that first propose regions then classify them, YOLO processes the entire image in a single pass, perfect for time-critical applications.

Slow and Steady: The Baseline

The journey began with optimism and harsh reality. Initial tests with standard YOLOv11 models on the Pi 5 delivered impressive accuracy but crushing latency. Frame processing times stretched beyond 2 seconds, useless for safety-critical applications where milliseconds matter.

Speed of Sight: Edge Optimisation

The path to real-time performance required surgical precision. Three key optimisations transformed the sluggish baseline into a responsive system:

NCNN Conversion: The models were converted to NCNN format, a high-performance neural network inference framework specifically designed for ARM processors and mobile devices.

Transfer Learning with Class Reduction: Using COCO minitrain as a starting point, the models were fine-tuned on a carefully curated subset focusing only on navigation-relevant objects. Static items that could be detected through depth alone were eliminated.

Resolution Scaling: Input resolution was reduced from 640 × 640 to 320 × 320 pixels, cutting the pixel count per frame by 75% and the computational load roughly in proportion, while retaining essential detail for hazard detection.
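The 75% figure follows directly from the pixel counts, as this quick check shows. The function name is just for illustration, and real inference cost only scales approximately with pixel count.

```python
def pixel_reduction(old_side, new_side):
    """Fraction of pixels removed when a square input shrinks from old_side to new_side."""
    return 1 - (new_side * new_side) / (old_side * old_side)


reduction = pixel_reduction(640, 320)
print(f"{reduction:.0%} fewer pixels per frame")
```

Halving each side quarters the area, so three quarters of the pixels disappear.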

The YOLOv11-nano variant emerged as the only model capable of hitting the critical 30 FPS target, though this speed came with recall trade-offs. These compromises made the depth-based backup system not just useful, but essential.
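For readers who want to try the conversion step themselves, here is a minimal sketch assuming the Ultralytics Python package. The source does not say which toolchain performed the conversion, and the weights filename is a placeholder rather than the fine-tuned checkpoint used in this project.

```python
def export_to_ncnn(weights="yolo11n.pt", imgsz=320):
    """Export a YOLO checkpoint to NCNN format and return the export result.

    Assumes the Ultralytics package is installed. The weights filename is a
    placeholder, not the project's fine-tuned model.
    """
    # Imported lazily so this module still loads where Ultralytics is absent.
    from ultralytics import YOLO

    model = YOLO(weights)
    # format="ncnn" selects the NCNN exporter; imgsz fixes the input size.
    return model.export(format="ncnn", imgsz=imgsz)
```

Calling `export_to_ncnn()` on a machine with Ultralytics installed should produce an NCNN model directory that can then be loaded for inference on the Pi.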

The Safety Net

When AI fails, physics prevails. The backup system operates on a beautifully simple principle: if something is too close, warn the user, regardless of what it is.

The system defines a "walking path" directly ahead of the user and continuously samples depth points across three critical zones:

- Imminent Zone (5 m): Immediate action required.

- Caution Zone (8 m): Prepare to react.

- Safe Zone (10 m): Maintain awareness.

These distances were calculated based on average walking speeds and human reaction times to haptic feedback, ensuring users have adequate time to respond to warnings.
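The zone logic can be sketched in a few lines. The thresholds come from the list above; the walking speed of 1.4 m/s is an assumed typical value, since the source does not give the figure it used, and the function names are hypothetical.

```python
# Zone limits in metres, innermost first, taken from the zone list.
ZONES = [("imminent", 5.0), ("caution", 8.0), ("safe", 10.0)]

# Assumed typical walking speed in m/s; the source does not state its figure.
WALKING_SPEED_MPS = 1.4


def classify_zone(distance_m):
    """Return the innermost zone containing distance_m, or None.

    Non-positive readings are treated as invalid, because depth cameras
    commonly report 0 when no depth is available for a pixel.
    """
    if distance_m <= 0:
        return None
    for name, limit_m in ZONES:
        if distance_m <= limit_m:
            return name
    return None  # beyond the monitored range


def seconds_to_reach(distance_m, speed_mps=WALKING_SPEED_MPS):
    """Time for the walker to cover distance_m, assuming a stationary obstacle."""
    return distance_m / speed_mps


for d in (3.2, 6.5, 9.9, 12.0):
    print(d, classify_zone(d))
```

Under that assumed speed, the 5 m imminent boundary leaves roughly three and a half seconds before contact with a stationary obstacle, and less if the obstacle is moving towards the user.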

Rather than attempting full RGB-depth frame alignment (computationally prohibitive), the system employs selective depth sampling. It queries depth values only at key points: the centroids of detected objects and a sparse 5-point grid within the walking path. This approach keeps processing well under the 33-millisecond per-frame budget while maintaining effective coverage.
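Selective sampling might look something like the sketch below. The layout of the 5-point grid (centre plus four inset corners), the walking-path rectangle, and the frame size are all assumptions, as the source gives only the point count. `get_distance` stands for any callable that returns metres for a pixel, with 0 meaning no data, which is the convention of pyrealsense2's `depth_frame.get_distance`.

```python
def grid_points(x0, y0, x1, y1):
    """Five sample pixels in the walking-path rectangle: centre plus four inset corners.

    This layout is an assumption; the source states only that five points are used.
    """
    cx, cy = (x0 + x1) // 2, (y0 + y1) // 2
    qx, qy = (x1 - x0) // 4, (y1 - y0) // 4
    return [(cx, cy),
            (x0 + qx, y0 + qy), (x1 - qx, y0 + qy),
            (x0 + qx, y1 - qy), (x1 - qx, y1 - qy)]


def box_centroid(box):
    """Centre pixel of an (x0, y0, x1, y1) bounding box."""
    x0, y0, x1, y1 = box
    return ((x0 + x1) // 2, (y0 + y1) // 2)


def nearest_reading(get_distance, points):
    """Smallest valid depth in metres over points, or None if every reading is invalid."""
    valid = [d for d in (get_distance(x, y) for x, y in points) if d > 0]
    return min(valid) if valid else None


# Stand-in for a depth frame: 9 m everywhere except a 4 m obstacle near the centre.
def fake_depth(x, y):
    return 4.0 if 150 <= x <= 170 and 110 <= y <= 130 else 9.0


path = (80, 60, 240, 180)  # hypothetical walking path in a 320 x 240 frame
points = grid_points(*path) + [box_centroid((20, 40, 60, 120))]
print(len(points), nearest_reading(fake_depth, points))
```

Six depth lookups per frame cost almost nothing compared with aligning every pixel, which is the point of the design. The flip side is that anything narrower than the gap between sample points can go unnoticed.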

Brains vs Brawn: AI Meets Raw Depth

The beauty of this hybrid approach lies in complementary strengths covering mutual weaknesses.

The object detector brings intelligence. It can distinguish between a harmless object and a moving cyclist, providing context-rich warnings that help users make informed decisions. However, it can miss objects, especially small or unusually shaped obstacles that don't fit its training patterns.

The depth monitor brings reliability. It doesn't care whether an obstacle is a person, pole, or parked car. If something breaches the safety zones, it triggers an alert. This system is immune to classification errors but lacks the contextual awareness to distinguish between threats and benign objects.

Together, they create a safety net with multiple layers:

- AI provides intelligent, context-aware detection

- Depth ensures nothing gets missed in the critical path ahead

- Combined warnings give users both situational awareness and immediate hazard alerts
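One way the two layers could be merged into a single stream of warnings is sketched below. The fusion rule, the 0.5 m tolerance for deciding that a depth reading is already explained by a labelled detection, and the message wording are all assumptions for illustration; the source does not describe its exact logic.

```python
def fuse_warnings(detections, path_distance_m, imminent_m=5.0, tolerance_m=0.5):
    """Combine labelled detections with the unlabelled walking-path depth reading.

    detections: list of (label, distance_m) pairs from the object detector.
    path_distance_m: nearest valid depth in the walking path, or None.
    Returns warning strings, nearest first.
    """
    warnings = [(dist, f"{label} at {dist:.1f} m")
                for label, dist in detections if dist <= imminent_m]

    # Depth fallback: fire only if no labelled detection already explains the reading.
    if path_distance_m is not None and path_distance_m <= imminent_m:
        explained = any(abs(dist - path_distance_m) < tolerance_m
                        for dist, _ in warnings)
        if not explained:
            warnings.append((path_distance_m, f"obstacle at {path_distance_m:.1f} m"))

    return [text for _, text in sorted(warnings)]


print(fuse_warnings([("cyclist", 4.2), ("person", 7.5)], 2.0))
print(fuse_warnings([("cyclist", 4.2)], 4.0))
```

In the first call the detector sees the cyclist but misses whatever sits 2 m ahead, so the depth layer adds a generic obstacle warning. In the second, the depth reading matches the cyclist and no duplicate alert is raised.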

The Road Test: Trials and Errors

Real-world testing revealed both the system's capabilities and its limitations. Scenarios ranged from controlled pedestrian crossings to dynamic street environments with multiple moving hazards.

Success Stories: The system consistently detected pedestrians crossing paths, cyclists approaching head-on, and vehicles pulling out of parking spaces. Average performance maintained around 30 FPS with 90% overall accuracy, meeting the critical real-time requirements.

Learning Moments: Thin vertical objects like lampposts and bollards occasionally slipped between depth sample points, causing missed detections. Additionally, depth accuracy degraded beyond the imminent collision range, leading to some false positives in the caution and safe zones.

These findings highlighted the fundamental trade-off between computational efficiency and comprehensive coverage, a challenge that points toward future hardware and algorithmic improvements.

Future Sight: Sharpening the Vision

The current system proves the concept, but the horizon holds exciting possibilities for enhancement:

Hardware Evolution: Migration to more powerful platforms like the Jetson Orin Nano Super would enable larger, more accurate models while supporting full-frame depth analysis for comprehensive coverage.

Neural Depth Estimation: AI-based monocular depth estimation could replace or augment hardware sensors, potentially reducing cost while improving accuracy and range.

Custom Training Data: Purpose-built datasets focusing specifically on BVIP navigation hazards would dramatically improve detection accuracy for relevant objects while reducing false positives.

Clear Path Ahead: From Vision to Reality

This project demonstrates that affordable, offline, real-time hazard detection for BVIP navigation isn't just possible; it's practical. The combination of modern AI techniques with robust hardware creates a system that can genuinely enhance safety and independence.

The key insight is that perfection isn't required; reliability is. By pairing intelligent AI detection with simple but dependable depth monitoring, the system provides multiple layers of protection that work together to keep users informed and safe.

The road ahead is illuminated by rapid advances in edge computing, AI model efficiency, and sensor technology. Each improvement brings us closer to a world where technology truly serves as a bridge between limitation and possibility, where no obstacle goes unseen, and every journey can be made with confidence.

This isn't just about building better assistive technology; it's about creating tools that restore agency, independence, and the fundamental freedom to navigate the world safely.